AI in Healthcare: Raise the Evidence Bar Now or Be Forced to Later

Summary

In 2012, experts predicted that artificial intelligence (AI) would eventually replace as much as 80% of current clinical practice by physicians. While AI has certainly expanded in healthcare, especially during the COVID-19 pandemic, the last few years have included some eventful setbacks in the use of AI across several sectors of the economy, including healthcare. Most of these problems are related to AI’s reliance on deep-learning algorithms that find patterns in what is known as training data, or source data that may be inherently inconsistent or biased. Another major setback is the slower-than-hoped-for rate of electronic health record (EHR) integration. What the industry is learning, what experts have argued, and what has come to the attention of regulators like the Federal Trade Commission (FTC) is that some of the data models on which AI relies may be biased, and that these biases could exacerbate existing disparities experienced by different patient and consumer communities. Recent communications and actions by the FTC suggest that the regulator is turning its attention to AI use in healthcare, which may have considerable implications for stakeholders engaged in digital health that relies on AI.

FTC’s Communication on Misuse of AI

The FTC recently published an unannounced blog post by one of its leading attorneys indicating that it will take a more active regulatory role on biased AI use across the economy. Recent comments by FTC Commissioner Rohit Chopra also signal this pivot toward regulating firms whose software offerings rely on AI. Historically, the FTC has not been as active in regulating the technology sector, but given recent actions against large Silicon Valley firms such as Google and Facebook, its passive days may be behind it.

In summary, the regulator is signaling its interest in pursuing the regulation of AI-powered technologies that show evidence of some of the following issues:

- Foundation or “training” data used to develop the machine learning that is missing pertinent information from particular populations

- Discriminatory outcomes in well-intentioned algorithms that may negatively impact specific populations more than others

- Lack of transparency into the algorithms developed for implementation

- Overpromising on what algorithms can actually deliver to customers

- Misleading information on the source of data (e.g., on how a developer or software provider sources the data powering the AI)

- Causing more harm than good, where the harm is not reasonably avoidable by consumers

- Privacy issues with sensitive data and high risk of data breaches

The FTC provided examples of these issues in its communication and—more importantly—ended the post with “hold yourself accountable—or be ready for the FTC to do it for you.” This very direct language indicates that the FTC is serious about this issue, and software vendors should consider their AI business models and strategies to limit the issues outlined above to avoid major setbacks.

AI Biases in Healthcare

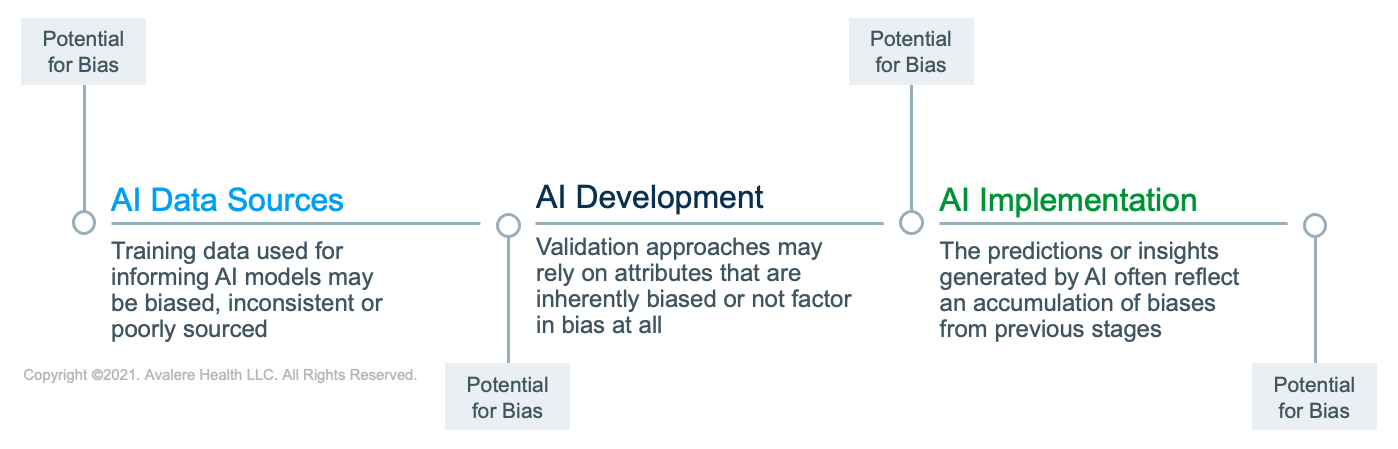

For healthcare specifically, the data used to power algorithms that form the foundation of many digital health products claiming to facilitate clinical and business decision-making often reflect the real world. We know that the real world is riddled with inequities across various demographic characteristics, including race, gender, and socioeconomic status. Problems with AI in healthcare are often attributed to biases throughout the AI development and use cycle, including in the data source, during data preparation, and in implementation (Figure 1). Major ethical implications are also present in the designed intent of AI in healthcare.

Bias in Data Sources

Poorly sourced, biased, or inconsistent datasets may ultimately lead to unintended consequences when software is implemented. The data collection process established for the purpose of developing deep-learning algorithms may have inherent biases. If the source data do not fully represent the populations in which the AI will be used, the AI may not work effectively and, moreover, may disproportionately harm excluded populations while benefiting those already favored before the AI was implemented.

One major example in recent years is the use of healthcare risk-prediction algorithms, based on biased data, that predict healthcare costs rather than illness. Unfortunately, using costs as a proxy for illness severity may not account for the unequal access to care experienced by Black patients in comparison to White patients.
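
The mechanism can be illustrated with a minimal sketch. The data below are entirely synthetic, and the group labels, the `Patient` fields, and the assumption that one group generates half the cost at the same illness burden are hypothetical choices made for illustration, not figures from any study. The sketch ranks patients for a care program first by cost and then by illness burden, and compares how often the lower-access group is selected.

```python
from dataclasses import dataclass


@dataclass
class Patient:
    group: str               # hypothetical demographic label
    chronic_conditions: int  # stand-in for true illness burden
    annual_cost: float       # observed spending, shaped by access to care


def top_k(patients, key, k):
    """Return the k patients ranked highest by the given key."""
    return sorted(patients, key=key, reverse=True)[:k]


def group_share(selected, group):
    """Fraction of the selected patients who belong to `group`."""
    return sum(p.group == group for p in selected) / len(selected)


# Synthetic data: both groups have the same illness burden, but group "B"
# is ASSUMED to generate 50% less cost at the same burden (reduced access).
patients = []
for conditions in range(1, 6):
    patients.append(Patient("A", conditions, 1000.0 * conditions))
    patients.append(Patient("B", conditions, 500.0 * conditions))

# Select the four "highest-risk" patients under each definition of risk.
by_cost = top_k(patients, key=lambda p: p.annual_cost, k=4)
by_illness = top_k(patients, key=lambda p: p.chronic_conditions, k=4)

share_b_cost = group_share(by_cost, "B")
share_b_illness = group_share(by_illness, "B")

print(f"Group B share when ranking by cost:    {share_b_cost:.2f}")
print(f"Group B share when ranking by illness: {share_b_illness:.2f}")
```

In this toy setup, group B makes up half of the selected patients when ranking by illness burden but only a quarter when ranking by cost, even though both groups are equally sick by construction.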

Bias in Data Preparation for Model Development

Bias may also be introduced during data preparation, when specific attributes are selected as primary drivers for the AI software to support prediction accuracy. If those attributes are already biased or unequal in the real world, training the AI on them may exacerbate unfair outcomes when the model is used.

Bias in AI Model Validation and Implementation

Approaches to AI model validation may also perpetuate biases if they do not build bias identification into their method, lack social context in an attempt at generalizability across multiple uses or contexts, or have difficulty framing “fairness” in mathematical terms (given that AI is solely dependent on computation to function).
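
To make the difficulty of framing fairness mathematically more concrete, here is a minimal sketch of two commonly used group-fairness measures: the demographic parity difference (gap in how often each group is flagged) and the equal opportunity difference (gap in true positive rates). The function names, group labels, and data are hypothetical and purely illustrative. A validation process could track measures like these, though neither one captures every notion of fairness.

```python
def selection_rate(preds):
    """Share of individuals the model flags (prediction == 1)."""
    return sum(preds) / len(preds)


def true_positive_rate(labels, preds):
    """Share of truly positive individuals (label == 1) the model flags."""
    flagged = [p for y, p in zip(labels, preds) if y == 1]
    return sum(flagged) / len(flagged)


def subset(group, groups, values):
    """Values belonging to members of `group`."""
    return [v for g, v in zip(groups, values) if g == group]


def demographic_parity_difference(groups, preds, a, b):
    """Selection rate of group a minus selection rate of group b."""
    return (selection_rate(subset(a, groups, preds))
            - selection_rate(subset(b, groups, preds)))


def equal_opportunity_difference(groups, labels, preds, a, b):
    """True positive rate of group a minus that of group b."""
    def tpr(g):
        return true_positive_rate(subset(g, groups, labels),
                                  subset(g, groups, preds))
    return tpr(a) - tpr(b)


# Synthetic example: 4 patients per group, same true prevalence in each.
groups = ["A"] * 4 + ["B"] * 4
labels = [1, 1, 0, 0, 1, 1, 0, 0]   # 1 = truly needs the intervention
preds = [1, 1, 1, 0, 1, 0, 0, 0]    # 1 = model flags the patient

dp_gap = demographic_parity_difference(groups, preds, "A", "B")
eo_gap = equal_opportunity_difference(groups, labels, preds, "A", "B")

print(f"Demographic parity difference: {dp_gap:.2f}")
print(f"Equal opportunity difference:  {eo_gap:.2f}")
```

A gap of zero on one measure does not imply a gap of zero on the other, which is part of why choosing a single mathematical definition of fairness is hard.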

Correcting for AI bias is often difficult. For example, AI-generated inferences are often nuanced and may be non-representative of certain aspects of a population. However, more methodical and thoughtful development approaches that consider the increased risks of AI use in healthcare may mitigate some of these consequences.

What Can Be Done to Close the Gaps

While bias in AI is uniquely challenging, some strategies are available to support bias mitigation per the experts in AI research. These are some of the solutions that have been proposed throughout the AI development, testing, and implementation phases:

- Build more representative and comprehensive data sets that accurately reflect the populations in which the AI will be deployed, and acknowledge that AI developed in one context will not easily translate to other contexts

- Develop AI-centric methods of algorithm validation to detect biases that might begin in the dataset used for model development

- Consider using algorithms to identify existing disparities in the data sets used to train deep-learning algorithms, to understand how those disparities are represented in the data, and to eliminate the effect of these biases

- Improve transparency in the algorithms behind AI-powered offerings to allow providers to discern possible biases and provide feedback to developers for incremental improvements

- Consider the intention behind deploying AI; if it is deployed with the goal of saving money, it is important to characterize how that will impact treatment decisions on especially vulnerable populations

- Consider diversifying the perspectives and experiences being used to inform AI development (e.g., involve the communities that will be impacted by the AI solutions)

- Improve ethics guidelines so that physicians are required to be educated about machine learning and AI systems and can better adapt the traditional responsibility physicians have for their patients to the new age of AI
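
As one illustration of the first strategy in the list above, the following minimal sketch compares the demographic makeup of a training set against the makeup of the population where the AI would be deployed. The group labels, the reference shares, and the 5-percentage-point tolerance are hypothetical assumptions chosen for the example. In practice the reference shares would have to come from a trusted source such as census or enrollment data.

```python
from collections import Counter


def representation_gaps(training_groups, reference_shares):
    """Training-set share minus reference share for each group.

    A negative value means the group is underrepresented in the training
    data relative to the deployment population.
    """
    counts = Counter(training_groups)
    total = len(training_groups)
    return {
        group: counts.get(group, 0) / total - share
        for group, share in reference_shares.items()
    }


def underrepresented(gaps, tolerance=0.05):
    """Groups whose training share falls short by more than `tolerance`."""
    return sorted(g for g, gap in gaps.items() if gap < -tolerance)


# Synthetic training set of 100 records with a hypothetical group label.
training_groups = ["A"] * 67 + ["B"] * 28 + ["C"] * 5

# ASSUMED makeup of the population where the model would be deployed.
reference_shares = {"A": 0.50, "B": 0.30, "C": 0.20}

gaps = representation_gaps(training_groups, reference_shares)
flagged = underrepresented(gaps)

for group, gap in gaps.items():
    print(f"Group {group}: gap of {gap:+.2f}")
print("Underrepresented groups:", flagged)
```

A check like this only detects missing representation. It does not by itself show whether the records that are present measure outcomes fairly, so it complements the other strategies rather than replacing them.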

Implications for Healthcare Stakeholders

Given the unlocking of data siloes thanks to improved data sharing and interoperability, as well as the exponential growth of AI development and use in healthcare, considerable implications exist for all major stakeholder sectors. Importantly, major advances in healthcare are already taking advantage of AI-powered technology that may face new regulations and oversight, including:

- Precision medicine (complex algorithms, digital health and omics-based tests)

- Imaging (medical image recognition, diagnostics, surgical applications)

- Risk-prediction (targeting populations and prioritizing services, risk mitigation)

- Wearables and remote patient monitoring

- Clinical research and drug discovery

Ultimately, the concerns regarding evidence, data bias, and privacy issues with AI in healthcare will impact strategies being implemented by a cross-section of healthcare stakeholders. For instance, major considerations exist for developers in relation to an expansion of regulation in this space by entities such as the FTC, which will seek to ensure that AI products conform to ethical practices and meet minimum evidentiary thresholds. For providers and their patients, the use of AI in healthcare may have considerable implications for patient care. While there is promising evidence of its benefits, identifying the risks associated with AI early on and improving appropriate use could reduce adverse outcomes in the long run. Further, both public and private payers will need to establish appropriateness and evidentiary criteria for AI-powered digital therapeutics and other digital health offerings as part of their coverage decisions in this space.

Despite significant growth in this sector, AI’s role as a dominant component of the industry is not right around the corner. However, the ongoing COVID-19 pandemic has accelerated its adoption as exemplified by a rise in use of telediagnosis, remote monitoring, and tele-assessment. This accelerated growth may require relevant stakeholders to consider and confront unintended consequences in their AI in healthcare strategies.

To receive Avalere updates, connect with us.